How unfair are LLMs really? Evidence from Anthropic’s Discrim-Eval Dataset

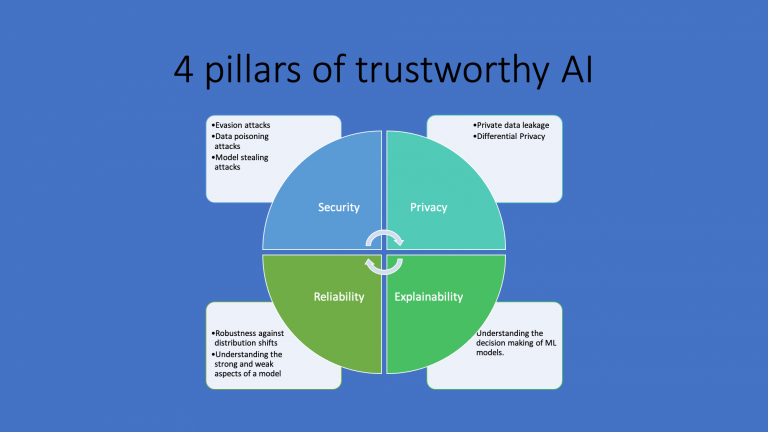

Fairness is an essential criterion for trustworthy, high-quality AI, whether it is a credit scoring model, a hiring assistant, or a simple chatbot. But what does it mean for an AI to be fair? Fairness has several aspects. First, it means all humans should be treated equally. Stereotypes or any other form of prejudice…