Validaitor is the #1 platform for building trust between AI developers and society.

The Problem

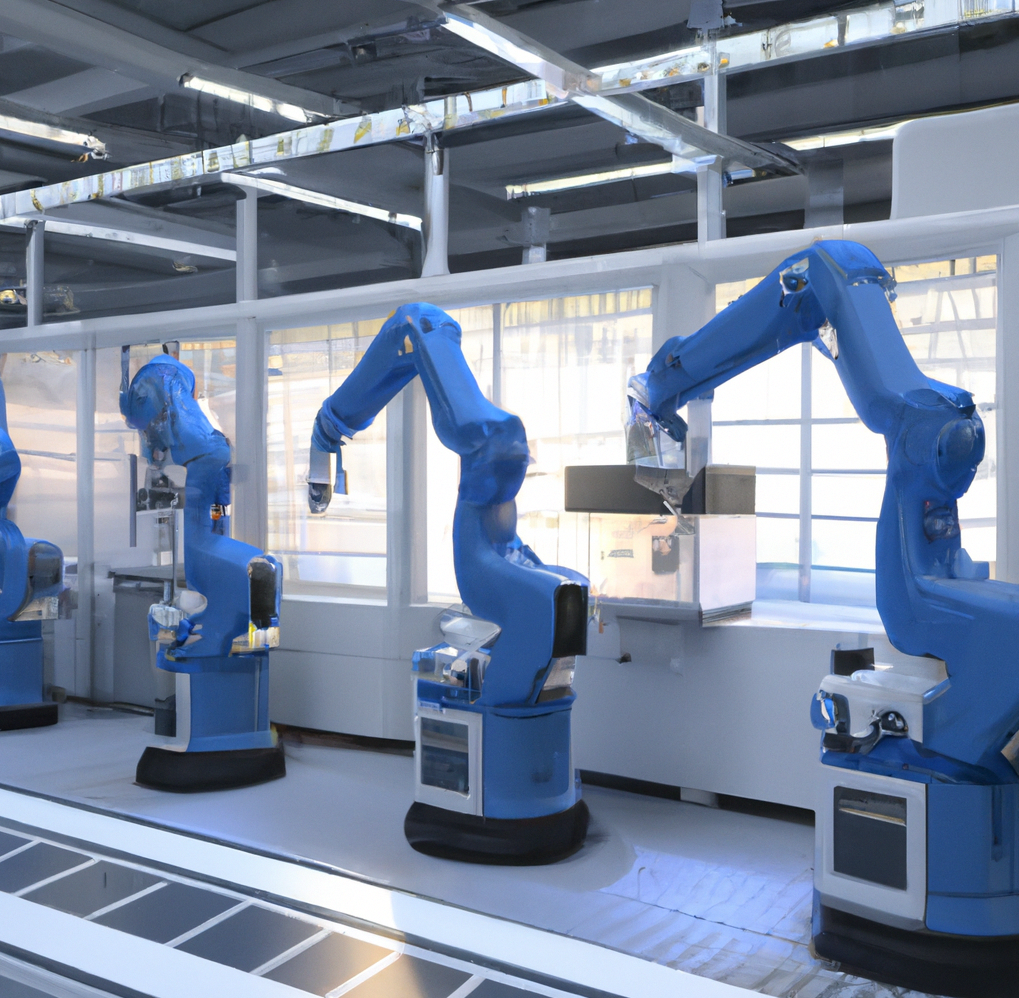

An AI developer wants to deploy a new version of an AI model that their customers use in factories to detect production defects and alert the maintenance team when necessary. However, the developer is not familiar with how to test the model for reliability and security, or with which methods to apply.

The Challenge

Reliability and security issues in AI models arise in subtle ways. Detecting drift and hacking attempts, and responding with appropriate measures, requires a good understanding of AI red teaming and blue teaming.

The Solution

VALIDAITOR provides out-of-the-box reliability and security tests that are tailored to the use case. With this holistic evaluation, AI developers gain a better understanding of the quality of their AI models, the weak spots that need more attention, and how vulnerable their models are to hacking.