Who is who in the EU AI Act?

The EU AI Act is here! Approved by the European Parliament on March 13th, 2024, this game-changing legislation is carving out a new path in AI governance. From the key players to their strategic interactions, understanding this ecosystem is crucial for understanding your role in it. With this post, we start our series on the AI Act by clarifying the “who is who” of the players involved.

AI Act Introduction

The rapid advancement of AI technologies has been transformative, offering opportunities but also raising complex questions about safety, privacy, ethics, and human rights. To address these challenges while fostering innovation, the European Union introduced the AI Act, a landmark proposal for a harmonized AI governance framework across member states. Its primary goal is to ensure AI systems uphold European values and standards, particularly concerning human dignity, freedom, equality, and the rule of law.

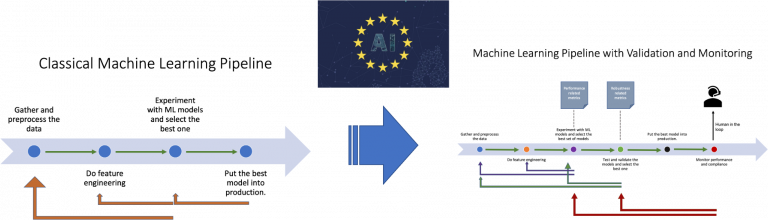

The AI Act introduces a risk-based approach, classifying AI systems based on their potential risks. It regulates high-risk AI systems, which significantly impact people’s safety, rights, and freedoms, with stringent requirements for transparency, data protection, oversight, and accountability.

The Act also prohibits certain AI use cases deemed incompatible with EU values, such as manipulative practices or discriminatory social scoring systems. The following risk pyramid gives a brief introduction to the risk levels. More details, especially regarding high-risk systems, will be discussed in upcoming blog posts.

Purpose and Scope

The AI Act is designed to improve the internal EU market’s functioning by creating a uniform legal framework for AI systems. It targets the development, marketing, and use of AI, aligning with Union values to encourage trustworthy AI adoption. This framework is intended to mitigate the potential risks AI poses to health, safety, and fundamental rights, thereby preventing the fragmentation of the internal market due to varying national regulations.

Stakeholders and Their Roles

- European AI Office: The AI Office was established to form the foundation of the European AI governance system. It is intended to become the center of expertise, fostering collaboration between member states and the wider community.

- Market Surveillance Authorities: Market surveillance authorities will play a crucial role in ensuring compliance with the regulations. Their primary responsibilities include monitoring high-risk systems, investigating non-compliance, and enforcing corrective measures.

- Notified Bodies: Notified bodies are third-party organizations designated by the member states to assess and certify the conformity of high-risk AI systems with the requirements set forth in the AI Act.

- AI Providers and Developers: Entities that create AI systems. They are responsible for ensuring their systems comply with the AI Act, including carrying out risk assessments and steering clear of prohibited AI practices.

- Users and Deployers of AI Systems: Organizations and individuals that utilize AI systems within their operations. They must ensure the systems they use comply with the AI Act’s requirements, especially for high-risk AI applications.

- Consumers and the General Public: The beneficiaries of the AI Act’s protections, which aim to safeguard their fundamental rights and freedoms against the risks posed by AI technologies.

Interactions Among Stakeholders

Interactions among stakeholders can get complex very quickly, since they have to account for regulations passed by local authorities as well as the general requirements of the AI Act. The following image displays these interactions with high-risk AI systems at the center, including how the codes of practice are set, how they are enforced, and which obligations providers and deployers have to fulfil to comply with the regulations.

Providers vs Deployers

Most entities working in the AI space will fall into one of these two roles, and we often encounter confusion about the role of organizations developing AI solutions on top of third-party services, e.g. the OpenAI API. The following sums up their primary responsibilities and key differences:

Providers

Providers are the entities in the ecosystem that actually develop and train AI systems. They are responsible for ensuring compliance during the design and development phase of these systems. Their obligations are to:

- Establish a risk management system including data governance

- Provide information to users about the system’s capabilities, limitations and risks

- Enable human oversight measures

- Comply with requirements regarding performance, robustness, fairness, etc.

The AI Act applies to all providers placing their systems on the EU market, regardless of whether they are established or located inside the Union.

Deployers

Deployers put the systems developed by the providers to use inside applications under their authority. Their primary responsibility lies in the monitoring and safe application of the AI systems they are using. Their obligations are to:

- Monitor the operation of the AI system

- Ensure human oversight measures are implemented

- Inform the provider of any serious incident that could impact compliance

Overall, providers are responsible for the compliant development of AI systems and for enabling deployers to fulfil their obligations, whereas deployers are responsible for monitoring these systems and using them responsibly.

Many entities developing their own models or customizing existing models to integrate into their systems will likely assume the roles and responsibilities of both providers and deployers.
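To make the distinction more tangible, here is a minimal Python sketch of how an organization could map itself to one or both roles and collect the matching obligations. It is a simplified illustration under our own assumptions, not a legal test: the `Organization` attributes and the helper functions `roles_for` and `obligations_for` are hypothetical names, and the obligation lists only repeat the summaries given above rather than the full text of the Act.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


# Obligations as summarized in this post, not the Act's full legal text.
OBLIGATIONS = {
    Role.PROVIDER: [
        "Establish a risk management system including data governance",
        "Provide information about capabilities, limitations and risks",
        "Enable human oversight measures",
        "Meet requirements on performance, robustness and fairness",
    ],
    Role.DEPLOYER: [
        "Monitor the operation of the AI system",
        "Ensure human oversight measures are implemented",
        "Inform the provider of any serious incident",
    ],
}


@dataclass
class Organization:
    # Hypothetical attributes, chosen for illustration only.
    name: str
    develops_or_customizes_models: bool
    uses_ai_in_own_applications: bool


def roles_for(org: Organization) -> set:
    """Return the roles an organization likely assumes (simplified)."""
    roles = set()
    if org.develops_or_customizes_models:
        roles.add(Role.PROVIDER)
    if org.uses_ai_in_own_applications:
        roles.add(Role.DEPLOYER)
    return roles


def obligations_for(org: Organization) -> list:
    """Collect the obligations for every role the organization assumes."""
    roles = roles_for(org)
    # Iterate over Role to keep a stable provider-then-deployer order.
    return [item for role in Role if role in roles for item in OBLIGATIONS[role]]


if __name__ == "__main__":
    # A company that customizes a model and runs it in its own product.
    company = Organization("ExampleCo", True, True)
    for obligation in obligations_for(company):
        print("-", obligation)
```

In this sketch, an organization that both customizes a model and uses it in its own application ends up with the combined list of provider and deployer obligations, which mirrors the dual role described above. Real-world classification depends on the details of each case.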

Compliance with Validaitor

At Validaitor, our goal is to help you navigate the complex landscape not only of AI regulations, but also of AI quality management, testing and red-teaming. Using our tools, you will be able to produce the information required for your compliance obligations and streamline the reporting process, allowing you to stay compliant with minimal effort.

Stay tuned for more updates regarding the AI Act and other topics related to testing AI.